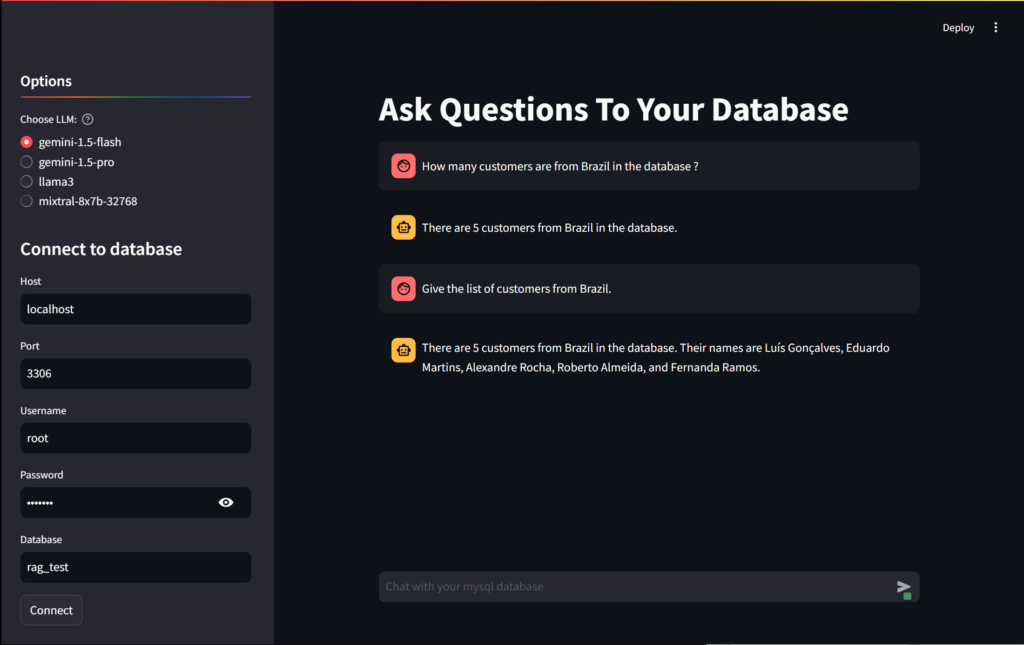

In today’s data-driven world, extracting meaningful information from databases quickly and efficiently is crucial. But what if you could simply ask your database questions in plain English and get answers back in natural language?

What’s the Big Deal?

Imagine you’re a business analyst who needs to pull data from a complex database. Instead of wrestling with SQL syntax, you could simply type:

“How many customers do we have in Brazil?”

And get an instant, human-readable response:

“Based on the database records, there are 5 customers from Brazil.”

This isn’t science fiction – it’s the reality of using LLMs to transform natural language into precise SQL queries and then interpreting the results.

The Tech Behind the Magic

SEO Tanvir Bd’s project leverages several cutting-edge technologies:

- LangChain: This Python framework orchestrates the entire process, from handling user input to managing the LLMs and database interactions.

- Multiple LLM Options: The project supports various language models, including:

  - Gemini 1.5 Flash

  - Gemini 1.5 Pro

  - LLaMa 3

  - Mixtral 8x7B (model ID `mixtral-8x7b-32768`)

- Streamlit: Provides an intuitive, web-based user interface.

- MySQL: A robust relational database to store and retrieve data.

How It All Comes Together

- User Input: The user types a question in natural language.

- LLM Translation: An LLM converts the question into a SQL query.

- Database Query: The generated SQL is executed against the MySQL database.

- Result Interpretation: Another LLM pass translates the raw database results into a human-friendly response.
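The four steps above can be sketched end to end without any LLM at all. In the minimal sketch below, the two LLM passes are replaced by hypothetical hard-coded stand-ins (`translate_to_sql` and `interpret`), and an in-memory SQLite database stands in for MySQL, so only the shape of the pipeline reflects the project, not its actual code.

```python
import sqlite3


def translate_to_sql(question: str) -> str:
    """Stand-in for the LLM translation step (hypothetical, hard-coded)."""
    canned = {
        "How many customers do we have in Brazil?":
            "SELECT COUNT(*) FROM customers WHERE country = 'Brazil'",
    }
    return canned[question]


def run_query(conn: sqlite3.Connection, sql: str) -> list:
    """Execute the generated SQL and return all rows."""
    return conn.execute(sql).fetchall()


def interpret(question: str, rows: list) -> str:
    """Stand-in for the second LLM pass (hypothetical, template-based)."""
    return f"Based on the database records, the answer to '{question}' is {rows[0][0]}."


def answer(conn: sqlite3.Connection, question: str) -> str:
    """Question -> SQL generation -> query execution -> result interpretation."""
    sql = translate_to_sql(question)
    rows = run_query(conn, sql)
    return interpret(question, rows)


# Demo data: an in-memory SQLite table standing in for the MySQL database
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [("Ana", "Brazil"), ("Luis", "Brazil"), ("Mia", "Canada")],
)
print(answer(conn, "How many customers do we have in Brazil?"))
```

Swapping the two stand-ins for real model calls leaves `answer` unchanged, which is why the project can offer several LLMs behind the same flow.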

Let’s dive deeper into each of these steps and see how they’re implemented in the code.

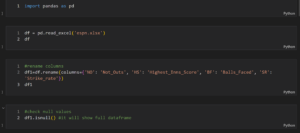

Breaking Down the Code

Let’s explore the key components of SEO Tanvir Bd’s implementation:

Setting Up the Environment

The project starts by importing necessary libraries and setting up the environment:

```python
import streamlit as st
from langchain_community.chat_models import ChatOllama
from langchain_community.utilities import SQLDatabase
from langchain_core.prompts import ChatPromptTemplate
from langchain_groq import ChatGroq
from langchain_google_genai import ChatGoogleGenerativeAI
from langchain_core.output_parsers import StrOutputParser
from dotenv import load_dotenv

# Load API keys and other settings from a local .env file
load_dotenv()
```

This setup ensures all the required components are available, including Streamlit for the UI, LangChain for LLM interactions, and database connectivity.
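Because `load_dotenv()` only copies values from a `.env` file into the environment, it can help to check up front that the key a chosen model needs is actually present. The sketch below is an illustration rather than part of the project: `missing_key` is a hypothetical helper, and the variable names `GOOGLE_API_KEY` and `GROQ_API_KEY` are assumed to be the defaults read by the Google and Groq integrations. It also assumes `llama3` is served locally through Ollama and needs no key.

```python
import os

# Assumed mapping from model name to the environment variable holding its API key.
REQUIRED_KEYS = {
    "gemini-1.5-flash": "GOOGLE_API_KEY",
    "gemini-1.5-pro": "GOOGLE_API_KEY",
    "mixtral-8x7b-32768": "GROQ_API_KEY",
    "llama3": None,  # assumed to run locally via Ollama, so no key is required
}


def missing_key(model: str, env=os.environ):
    """Return the name of the API key the model needs but env lacks, else None."""
    key = REQUIRED_KEYS.get(model)
    if key and not env.get(key):
        return key
    return None


if __name__ == "__main__":
    for name in REQUIRED_KEYS:
        problem = missing_key(name)
        print(name, "->", f"missing {problem}" if problem else "ok")
```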

LLM Selection

The project offers flexibility in choosing the LLM:

```python
def get_llminfo():
    st.sidebar.header("Options", divider='rainbow')
    tip1 = "Select a model you want to use."
    model = st.sidebar.radio(
        "Choose LLM:",
        ("gemini-1.5-flash", "gemini-1.5-pro", "llama3", "mixtral-8x7b-32768"),
        help=tip1,
    )
    return model
```

This function creates a radio-button group in the Streamlit sidebar, allowing users to select their preferred LLM. It returns the selected model name as a string.
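The article does not show how the selected name is turned into a model object, so the following is only a sketch of one plausible dispatch. `provider_for` and `build_llm` are hypothetical names, and the mapping (Gemini to Google, `llama3` to Ollama, Mixtral to Groq) is inferred from the imports shown earlier. The chat classes are imported lazily so that only the chosen integration has to be installed.

```python
def provider_for(model: str) -> str:
    """Map a model name from the sidebar to the service assumed to host it."""
    if model.startswith("gemini"):
        return "google"
    if model == "llama3":
        return "ollama"
    if model.startswith("mixtral"):
        return "groq"
    raise ValueError(f"Unknown model: {model}")


def build_llm(model: str):
    """Instantiate the chat model for the chosen name (imports are deferred)."""
    provider = provider_for(model)
    if provider == "google":
        from langchain_google_genai import ChatGoogleGenerativeAI
        return ChatGoogleGenerativeAI(model=model)
    if provider == "ollama":
        from langchain_community.chat_models import ChatOllama
        return ChatOllama(model=model)
    from langchain_groq import ChatGroq
    return ChatGroq(model=model)
```

Keeping the name-to-provider mapping in a separate pure function makes it easy to test without API keys or network access.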

Database Connection

The connectDatabase function establishes a connection to the MySQL database:

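```python
def connectDatabase(username, port, host, password, database):
    # Build a SQLAlchemy-style URI for the MySQL Connector driver
    mysql_uri = f"mysql+mysqlconnector://{username}:{password}@{host}:{port}/{database}"
    # Keep the connection in session state so it survives Streamlit reruns
    st.session_state.db = SQLDatabase.from_uri(mysql_uri)
```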

This function uses LangChain’s SQLDatabase utility to create a connection, storing it in Streamlit’s session state for easy access throughout the application.
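One detail worth noting about the URI above: a password containing characters such as `@`, `:` or `/` would be misread as part of the URI structure. A common remedy is to URL-encode the credentials first. The helper below, `build_mysql_uri`, is a hypothetical variation on the project's string formatting and is not taken from its code.

```python
from urllib.parse import quote_plus


def build_mysql_uri(username, password, host, port, database):
    """Build a MySQL connection URI with URL-encoded credentials."""
    return (
        f"mysql+mysqlconnector://{quote_plus(username)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


# Example with a password that would otherwise break the URI
print(build_mysql_uri("root", "p@ss:w/rd", "localhost", 3306, "shop"))
```

The resulting string can be passed to `SQLDatabase.from_uri` in the same way as the original.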

The Heart of the System: LLM-Powered Query Generation

The getQueryFromLLM function is where the magic happens:

```python
def getQueryFromLLM(question, model):
    ...  # … (function body omitted)
```

This function:

- Creates a prompt template that includes the database schema and example queries.

- Selects the appropriate LLM based on the user’s choice.

- Uses LangChain to create a processing chain: prompt → LLM → output parsing.

- Invokes the chain with the user’s question and database schema.

- Post-processes the LLM’s response to extract the clean SQL query.
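The last step, post-processing, matters because chat models often wrap SQL in a Markdown code fence or add a sentence before it. The project's own cleanup logic is not shown, so the sketch below is only one way to do it: `extract_sql` is a hypothetical helper that pulls out the first fenced block if there is one and otherwise returns the trimmed response.

```python
import re

# Three backticks, built programmatically so this example nests cleanly in Markdown
FENCE_MARK = "`" * 3

# Matches an optional "sql" language tag, then captures everything up to the closing fence
FENCE = re.compile(
    FENCE_MARK + r"(?:sql)?\s*(.*?)" + FENCE_MARK,
    re.DOTALL | re.IGNORECASE,
)


def extract_sql(response: str) -> str:
    """Return the SQL inside the first code fence, or the whole response if unfenced."""
    match = FENCE.search(response)
    sql = match.group(1) if match else response
    return sql.strip()


fenced = "Here is the query:\n" + FENCE_MARK + "sql\nSELECT COUNT(*) FROM customers;\n" + FENCE_MARK
print(extract_sql(fenced))
```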

Executing the Query and Interpreting Results

Once we have the SQL query, the next steps are to run it against the database and interpret the results:

```python
def runQuery(query):
    # Run the SQL only if a database connection is stored in the session
    return st.session_state.db.run(query) if st.session_state.db else "Please connect to database"


def getResponseForQueryResult(question, query, model, result):
    template2 = """below is the schema of MYSQL database, read the schema carefully about the table and column names of each table.
    Also look into the conversation if available
    Finally write a response in natural language by looking into the conversation and result.

    {schema}

    # … (example responses omitted for brevity)

    your turn to write response in natural language from the given result :
    question: {question}
    SQL query : {query}
    Result : {result}
    Response:
    """

    prompt2 = ChatPromptTemplate.from_template(template2)

    # … (LLM selection logic)

    chain2 = prompt2 | llm
    response2 = chain2.invoke({
        "question": question,
        "schema": getDatabaseSchema(),
        "query": query,
        "result": result
    })
    return response2.content
```

These functions:

- Execute the SQL query against the connected database.

- Take the raw query results and pass them back to an LLM.

- Use a carefully crafted prompt to guide the LLM in generating a natural language response.

- Return the human-friendly interpretation of the database results.

Bringing It All Together with Streamlit

The Streamlit UI ties all these components together into a user-friendly interface:

```python
st.set_page_config(
    page_icon=":speech_balloon:",
    page_title="Chat with MYSQL DB",
    layout="centered"
)

model = get_llminfo()

# … (database connection UI elements)

question = st.chat_input('Chat with your mysql database')

if question:
    if "db" not in st.session_state:
        st.error('Please connect database first.')
    else:
        st.session_state.chat.append({
            "role": "user",
            "content": question
        })
        query = getQueryFromLLM(question, model)
        result = runQuery(query)
        response = getResponseForQueryResult(question, query, model, result)
        st.session_state.chat.append({
            "role": "assistant",
            "content": response
        })

# Re-render the full conversation on every rerun
for chat in st.session_state.chat:
    st.chat_message(chat['role']).markdown(chat['content'])
```

This code:

- Sets up the Streamlit page layout and LLM selection.

- Provides a chat-like interface for user input.

- Orchestrates the entire process: question → SQL generation → query execution → result interpretation.

- Maintains a chat history for a conversational experience.
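The chat history only works if `st.session_state.chat` exists before the first message is appended. The excerpt does not show where that list is created, so the sketch below illustrates the usual pattern with two hypothetical helpers. They accept any mapping-like object, so a plain `dict` can stand in for `st.session_state` when trying them outside Streamlit.

```python
def ensure_chat_history(state):
    """Create the chat list on first use and return it."""
    if "chat" not in state:
        state["chat"] = []
    return state["chat"]


def record_turn(state, question, response):
    """Append one user question and the assistant's reply to the history."""
    history = ensure_chat_history(state)
    history.append({"role": "user", "content": question})
    history.append({"role": "assistant", "content": response})


# A plain dict stands in for st.session_state in this demonstration
session = {}
record_turn(session, "How many customers do we have in Brazil?", "There are 5 customers from Brazil.")
for message in session["chat"]:
    print(f"{message['role']}: {message['content']}")
```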

Why This Matters

SEO Tanvir Bd’s project is more than just a cool tech demo. It represents a significant step towards democratizing data access. Here’s why it’s important:

- Accessibility: Non-technical users can now query databases without SQL knowledge.

- Efficiency: Rapidly prototype and test database queries without writing complex SQL.

- Flexibility: Support for multiple LLMs allows for experimentation and optimization.

- Learning Tool: Helps users understand SQL by seeing how their questions translate to queries.

Potential Applications

This technology has wide-ranging applications across industries:

- Business Intelligence: Executives can get quick insights without relying on data teams.

- Customer Support: Reps can quickly access relevant customer data through natural language queries.

- Education: Students can explore databases and learn SQL concepts interactively.

- Research: Scientists can query complex datasets more intuitively.

Challenges and Considerations

While powerful, this approach isn’t without its challenges:

- LLM Accuracy: The quality of SQL generation depends on the LLM’s training and prompts.

- Data Security: Care must be taken to prevent unauthorized data access through clever prompting.

- Performance: Complex queries or large datasets might lead to slower response times.

- Database Schema Changes: The system needs to adapt to evolving database structures.
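To make the data security point concrete, one possible safeguard is to reject any generated statement that is not a single read-only query before it reaches the database. The `is_read_only` check below is a hypothetical, deliberately conservative sketch: it can refuse valid queries (for example, one with a semicolon inside a string literal), and it complements rather than replaces connecting with a database user that has read-only privileges.

```python
import re

# Statement types treated as safe to run
ALLOWED_START = re.compile(r"\s*(select|show|describe|explain|with)\b", re.IGNORECASE)

# Keywords that indicate a write or schema change anywhere in the statement
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|truncate|create|grant|revoke)\b",
    re.IGNORECASE,
)


def is_read_only(sql: str) -> bool:
    """Return True only for a single statement that looks read-only."""
    statement = sql.strip().rstrip(";")
    if ";" in statement:
        return False  # more than one statement, or a semicolon we cannot vouch for
    if not ALLOWED_START.match(statement):
        return False
    return FORBIDDEN.search(statement) is None


for candidate in (
    "SELECT COUNT(*) FROM customers WHERE country = 'Brazil';",
    "DROP TABLE customers",
    "SELECT 1; DELETE FROM customers",
):
    print(is_read_only(candidate), "<-", candidate)
```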

Future Directions

SEO Tanvir Bd’s project lays a foundation for exciting future developments:

- Multi-database Support: Extend beyond MySQL to other database types.

- Query Optimization: Use LLMs to suggest index creation or query improvements.

- Natural Language Data Visualization: Generate charts and graphs based on natural language requests.

- Conversational Context: Maintain context over multiple queries for more complex analysis.

Conclusion

From Text to SQL Query represents a significant step forward in making databases more accessible and user-friendly. By leveraging the power of LLMs, Streamlit, and LangChain, SEO Tanvir Bd has created a tool that bridges the gap between natural language and structured data queries.

Whether you’re a data scientist, a business analyst, or just someone curious about databases, this project opens up new possibilities for interacting with your data. It’s a prime example of how AI can augment human capabilities, making complex tasks more intuitive and accessible.

As LLM technology continues to evolve, we can expect even more sophisticated and accurate natural language interfaces to databases. The future of data interaction is here, and it speaks your language.

Learn more about LangChain’s SQL capabilities to dive deeper into the technology powering this project.

Ready to try it out? Check out the project repository to begin your journey into the world of natural language database querying!

Frequently Asked Questions

Q1: What makes this project unique compared to other natural language SQL tools?

A: This project stands out due to its flexibility in using multiple large language models (LLMs) including Gemini 1.5 Flash, Gemini 1.5 Pro, LLaMa3, and Mixtral 8x7B. Users can choose the LLM that best fits their needs or compare results across different models. Additionally, the Streamlit GUI makes it user-friendly and accessible to non-technical users.

Q2: Can I use this tool with my existing MySQL database?

A: Yes! The tool is designed to work with any MySQL database. You just need to provide the connection details (host, port, username, password, and database name) in the Streamlit sidebar. Once connected, you can start querying your database using natural language.

Q3: How do I choose between the different LLM options?

A: Each LLM has its strengths:

- Gemini 1.5 Flash: Fastest for quick queries

- Gemini 1.5 Pro: More powerful for complex queries

- LLaMa3: Open-source option, good for customization

- Mixtral 8x7B: Another powerful open-source option

Choose based on your specific needs for speed, accuracy, or customization. You can also experiment with different models to see which performs best for your particular database and query types.

Q4: Is my data safe when using this tool?

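A: The Streamlit app itself runs locally. However, when you select a cloud-hosted model (Gemini, or Mixtral through Groq), your question, the database schema, and the query results are sent to that provider so the model can generate the SQL and the final answer. Always use secure database credentials, and consider additional security measures, such as a read-only database user or a locally hosted model, when dealing with sensitive data.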

Q5: Can this tool handle complex SQL queries with joins and subqueries?

A: Yes, the tool can handle complex queries including joins and subqueries. The LLMs are trained to understand and generate a wide range of SQL operations. However, for very complex queries, it’s important to be clear and specific in your natural language question.

Q6: How accurate are the generated SQL queries and responses?

A: The accuracy depends on the LLM used and the complexity of the query. In general, these models are quite accurate for standard queries. However, it’s always a good practice to verify critical results, especially for complex or unusual queries.

Q7: Can I see the actual SQL query that’s being generated?

A: Yes, the tool prints the generated SQL query to the console. This allows you to verify the query and understand how your natural language question is being interpreted.

Q8: How do I install and set up this tool?

A: To set up the tool:

- Clone the project repository

- Install required Python packages: pip install streamlit langchain langchain-community langchain-groq langchain-google-genai mysql-connector-python python-dotenv

- Set up your environment variables for API keys (if using cloud-based LLMs)

- Run the Streamlit app: streamlit run chat_datbase_LLMs.py

Q9: Can this tool work with databases other than MySQL?

A: The current implementation is specific to MySQL, but the underlying technology (LangChain) supports various SQL databases. With some modifications to the code, you could adapt it to work with PostgreSQL, SQLite, or other SQL databases.
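In practice, most of that modification is the connection URI, since `SQLDatabase.from_uri` accepts SQLAlchemy-style URIs. The sketch below shows what the URI might look like for SQLite and PostgreSQL. The helper names are hypothetical, the `psycopg2` driver is an assumption (it must be installed separately), and the prompts may also need adjusting because they mention MySQL by name.

```python
from urllib.parse import quote_plus


def sqlite_uri(path: str) -> str:
    """URI for a SQLite database file given as a relative path."""
    return f"sqlite:///{path}"


def postgres_uri(username, password, host, port, database):
    """URI for PostgreSQL using the psycopg2 driver, with encoded credentials."""
    return (
        f"postgresql+psycopg2://{quote_plus(username)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


print(sqlite_uri("chinook.db"))
print(postgres_uri("analyst", "s3cret", "localhost", 5432, "sales"))
```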

Q10: How does the tool handle ambiguous questions?

A: The LLMs are trained to interpret natural language contextually. However, if a question is ambiguous, the tool will make its best guess. For best results, try to be as specific as possible in your questions. If you’re not getting the expected results, try rephrasing your question.

Q11: Can I use this tool for data analysis beyond simple queries?

A: While the primary function is to translate natural language to SQL, you can certainly use it for data analysis. You can ask complex questions that involve aggregations, groupings, and calculations. However, for advanced statistical analysis or machine learning tasks, you might need to combine this tool with other data science libraries.

Q12: Is it possible to extend this tool with custom functions or integrate it with other systems?

A: Absolutely! The modular nature of the code and the use of LangChain make it quite extensible. You could add custom functions to process results further, integrate with data visualization tools, or even extend it to perform actions based on query results.