Are you looking to harness the power of web scraping for job market analysis? You’ve come to the right place! In this guide, we’ll walk you through the process of scraping Indeed.com using two powerful Python libraries: Playwright and BeautifulSoup. Whether you’re a data scientist, market researcher, or just curious about job trends, this tutorial will equip you with the tools to extract valuable insights from one of the world’s largest job sites.

Why Scrape Indeed.com?

Before we dive into the technical details, let’s consider why scraping Indeed.com can be valuable:

- Gain real-time insights into job market trends

- Analyze salary ranges for specific roles or industries

- Track demand for particular skills or qualifications

- Conduct comprehensive job searches across multiple locations

Setting Up Your Environment

Before we begin, make sure you have Python installed on your system. We’ll be using two main libraries for this project:

- Playwright: A powerful tool for browser automation

- BeautifulSoup: A library for parsing HTML and XML documents

To install these libraries, open your terminal and run:

| pip install playwright beautifulsoup4 pandas lxml |

Now that we have our environment set up, let’s move on to the first step: downloading the HTML content from Indeed.com.

Here I discussed this project How To Scrape Indeed.com | HTML Download via Playwright | Parse via BeautifulSoup | Step By Step

Step 1: Downloading HTML with Playwright

Playwright allows us to automate browser interactions, making it perfect for downloading dynamic web content. Here’s how we use it to fetch Indeed.com job listings:

| from playwright.sync_api import sync_playwright import time with sync_playwright() as p: browser = p.chromium.launch(headless=False) context = browser.new_context( user_agent=”Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/129.0.0.0 Safari/537.36″ ) page = context.new_page() page.goto(‘https://www.indeed.com/jobs?q=datascience&vjk=6808c8f348c5f750’) time.sleep(3) # Scroll to load all content last_height = page.evaluate(“document.body.scrollHeight”) while True: page.evaluate(“window.scrollTo(0, document.body.scrollHeight)”) page.wait_for_timeout(2000) # Wait for 2 seconds to load new content new_height = page.evaluate(“document.body.scrollHeight”) if new_height == last_height: break last_height = new_height # Save the content to a file content = page.content() with open(‘content.html’, ‘w’, encoding=’utf-8′) as f: f.write(content) browser.close() |

Let’s break down what this script does:

- We import the necessary libraries and set up Playwright.

- We launch a Chrome browser and create a new context with a custom user agent.

- We navigate to Indeed.com and search for “datascience” jobs.

- The script then scrolls through the entire page to load all job listings.

- Finally, we save the HTML content to a file named ‘content.html’.

This approach allows us to capture the full page content, including dynamically loaded job listings that might not be visible in the initial page load.

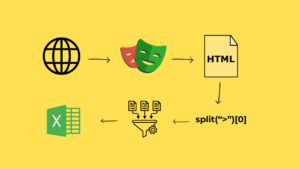

Step 2: Parsing HTML with BeautifulSoup

Now that we have our HTML file, let’s use BeautifulSoup to extract the job information we need:

| from bs4 import BeautifulSoup import pandas as pd with open(‘content.html’, ‘r’, encoding=’utf-8′) as f: content = f.read() soup = BeautifulSoup(content, features=”lxml”) listings = soup.find_all(‘td’, class_=’resultContent’) total_data = [] for listing in listings: title = listing.select_one(‘span[title]:not([title= False])’).get_text() company_name = listing.select(‘[data-testid=”company-name”]’)[0].get_text() company_location = listing.select(‘[data-testid=”text-location”]’)[0].get_text() total_data.append([title, company_name, company_location]) df = pd.DataFrame(total_data, columns=[“Title”, “Company Name”, “Company Location”]) print(df) # Uncomment to save to file: df.to_csv(“indeed_jobs.csv”, index=False) df.to_excel(“indeed_jobs.xlsx”, index=False) |

This script does the following:

- We read the HTML file we saved earlier.

- We create a BeautifulSoup object to parse the HTML.

- We find all job listings using the ‘resultContent’ class.

- For each listing, we extract the job title, company name, and location.

- We store this data in a pandas DataFrame and print it.

- Optionally, we can save the data to a CSV or Excel file.

Understanding the BeautifulSoup Selectors

- select_one(‘span[title]:not([title= False])’): This selects the first span element with a title attribute that isn’t False, which corresponds to the job title.

- select(‘[data-testid=”company-name”]’): This finds elements with the data-testid attribute set to “company-name”.

- select(‘[data-testid=”text-location”]’): Similarly, this finds elements containing the job location.

These selectors are crucial for accurately extracting the information we need from Indeed’s HTML structure.

Understanding the Alternative ways to get same output

I can write the code to get the title text in more 4 ways. Let discuss it

| title = listing.select_one(‘span[title]:not([title=””])’).get_text() title = listing.select(‘[title]’)[0].get_text() itle = listing.find(‘span’,{‘title’:True}).get_text() title = listing.select_one(‘span[title]’).get_text() |

All these lines are used to extract the text content of an HTML element with a title attribute using BeautifulSoup, a Python library for web scraping.

| title = listing.select_one(‘span[title]:not([title=””])’).get_text(): |

- select_one(‘span[title]:not([title=””])’): This CSS selector finds the first <span> element that has a title attribute which is not empty.

- get_text(): This method extracts the text content from the selected element.

| title = listing.select(‘[title]’)[0].get_text(): |

- select(‘[title]’): This CSS selector finds all elements with a title attribute.

- [0]: This selects the first element from the list of elements found.

- get_text(): This method extracts the text content from the selected element.

| title = listing.find(‘span’, {‘title’: True}).get_text(): |

- find(‘span’, {‘title’: True}): This method finds the first <span> element that has a title attribute.

- get_text(): This method extracts the text content from the selected element.

| title = listing.select_one(‘span[title]’).get_text(): |

- select_one(‘span[title]’): This CSS selector finds the first <span> element with a title attribute.

- get_text(): This method extracts the text content from the selected element.

In summary, all these lines aim to extract the text from a <span> element with a title attribute, but they use slightly different methods and selectors to achieve this. The first line ensures the title attribute is not empty, while the others do not have this check.

Conclusion

Scraping Indeed.com using Playwright and BeautifulSoup can provide valuable insights into job market trends. By following this guide, you’ve learned how to:

- Use Playwright to download HTML content from dynamic web pages.

- Parse HTML with BeautifulSoup to extract structured data.

- Handle pagination to scrape multiple pages of results.

- Address common issues in web scraping projects.

Remember to use these techniques responsibly and in compliance with Indeed’s terms of service. Happy scraping!